Op-Ed: Don’t ban chatbots in classrooms — use them to change how we teach

Will chatbots that can generate sophisticated prose destroy education as we know it? We hope so.

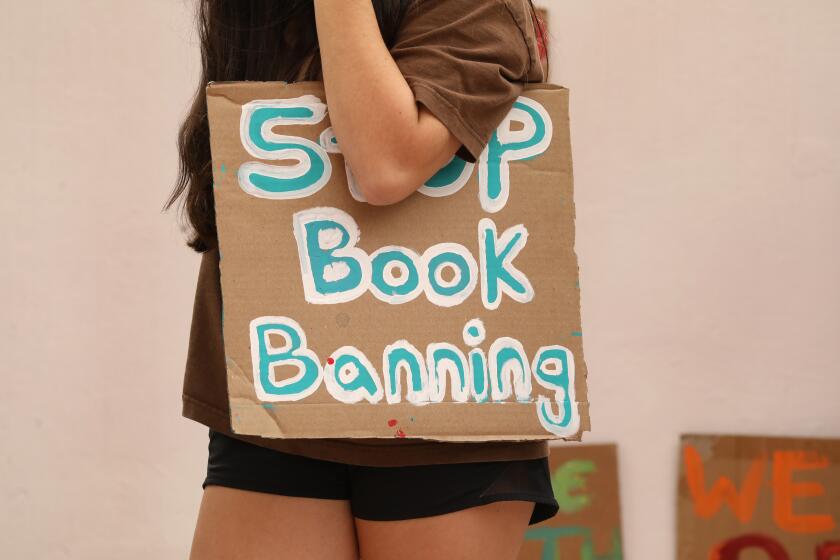

New York City’s Department of Education recently banned the use of ChatGPT, a bot created by OpenAI with a technology called the Generative Pretrained Transformer.

“While the tool may be able to provide quick and easy answers to questions,” says the official statement, “it does not build critical-thinking and problem-solving skills, which are essential for academic and lifelong success.”

We disagree; it can and should.

Banning artificial intelligence of this kind from the classroom is an understandable but nearsighted response. Instead, we must find a way forward in which such technologies complement, rather than substitute for, student thinking. One day soon, GPT and similar AI models could be to essay writing what calculators are to calculus.

We know that GPT is the ultimate cheating tool: It can write fluent essays for any prompt, write computer code from English descriptions, prove math theorems and correctly answer many questions on law and medical exams.

Banning ChatGPT is like prohibiting students from using Wikipedia or spell-checkers. Even if it were the “right” thing to do in principle, it is impossible in practice. Students will find ways around the ban, which of course will necessitate a further defensive response from teachers and administrators, and so on. It’s hard to believe that an escalating arms race between digitally fluent teenagers and their educators will end in a decisive victory for the latter.

AI is not coming. AI is here. And it cannot be banned.

So, what should we do?

Educators have always wanted their students to know and to think. ChatGPT beautifully demonstrates how knowing and thinking are not the same thing. Knowing is committing facts to memory; thinking is applying reason to those facts. The chatbot knows everything on the internet but doesn’t really think anything. That is, it cannot do what the education philosopher John Dewey, more than a century ago, called reflective thinking: “active, persistent and careful consideration of any belief or supposed form of knowledge in the light of the grounds that support it.”

Technology has for quite a while been making rote knowledge less important. Why memorize what the 14th element in the periodic table is, or the 10 longest rivers in the world, or Einstein’s birthday, when you can just Google it? At the same time, the economic incentives for thinking, as opposed to knowing, have increased. It is no surprise that the average student thinks better but knows less than counterparts from a century ago.

Chatbots may well accelerate the trend toward valuing critical thinking. In a world where computers can fluently (if often incorrectly) answer any question, students (and the rest of us) need to get much better at deciding what questions to ask and how to fact-check the answers the program generates.

How, specifically, do we encourage young people to use their minds when authentic thinking is so hard to tell apart from its simulacrum? Teachers, of course, will still want to proctor old-fashioned, in-person, no-chatbot-allowed exams.

But we must also figure out how to do something new: How to use tools like GPT to catalyze, not cannibalize, deeper thinking. Just like a Google search, GPT often generates text that is fluent and plausible — but wrong. So using it requires the same cognitive heavy lifting that writing does: deciding what questions to ask, formulating a thesis, asking more questions, generating an outline, picking which points to elaborate and which to drop, looking for facts to support the arguments, finding appropriate references to back them up and polishing the text.

GPT and similar AI technology can help with those tasks, but they can’t (at least in the near future) put them all together. Writing a good essay still requires lots of human thought and work. Indeed, writing is thinking, and authentically good writing is authentically good thinking.

One approach is to focus on the process as much as the end result. For instance, teachers might require — and assess — four drafts of an essay, as the writer John McPhee has suggested. After all, as McPhee said, “the essence of the process is revision.” Each draft gets feedback (from the teacher, from peers, or even from a chatbot), then the students produce the next draft, and so on.

Will AI one day surpass human beings not just in knowing but in thinking? Maybe, but such a future has yet to arrive. For now, students and the rest of us must think for ourselves.

GPT is not the first, nor the last, technological advance that would seem to threaten the flowering of reason, logic, creativity and wisdom. In “Phaedrus,” Plato wrote that Socrates lamented the invention of writing: “It will implant forgetfulness in their souls; they will cease to exercise memory because they rely on that which is written, calling things to remembrance no longer from within themselves, but by means of external marks.” Writing, Socrates thought, would enable the semblance of wisdom in a way that the spoken word would not.

Like any tool, GPT is an enemy of thinking only if we fail to find ways to make it our ally.

Angela Duckworth is a psychology professor at the University of Pennsylvania who studies character development in adolescence. Lyle Ungar is a computer science professor at the University of Pennsylvania who focuses on artificial intelligence.
