Op-Ed: To keep social media from inciting violence, focus on responses to posts more than the posts themselves

When Facebook tried to get its external Oversight Board to decide whether it should ban Donald Trump permanently, the board demurred and tossed the hot potato back to Facebook, ordering the company to make the final call within six months. But one person had unwittingly offered a vital lesson in content moderation that Facebook and other tech companies have so far missed — Trump himself.

It’s this: To predict the impact of inflammatory content, and make good decisions about when to intervene, consider how people respond to it.

Trying to gauge whether this or that post will tip someone into violence by its content alone is ineffective. Individual posts are ambiguous, and besides, they do their damage cumulatively, like many other toxic substances.

To put it another way, judging posts exclusively by their content is like studying cigarettes to understand their toxicity. It’s one useful form of data, but to understand what smoking can do to people’s lungs, study the lungs, not just the smoke.

If social media company staffers had been analyzing responses to Trump’s tweets and posts in the weeks before the Jan. 6 attack on the Capitol, they would have seen concrete plans for mayhem taking shape, and they could have taken action when Trump was still inciting violence, instead of after it occurred.

It was 1:42 a.m. back on Dec. 19 when Trump tweeted: “Big protest in D.C. on January 6th. Be there, will be wild!” The phrase “will be wild” became a kind of code that appeared widely on other platforms, including Facebook. Trump did not call for violence explicitly or predict it with those words, yet thousands of his followers understood him to be ordering them to bring weapons to Washington, ready to use them. They openly said so online.

Members of the forum TheDonald reacted almost instantly to the insomniac tweet. “We’ve got marching orders, bois,” read one post. And another: “He can’t exactly openly tell you to revolt. This is the closest he’ll ever get.” To that came the reply: “Then bring the guns we shall.”

Their riot plans were unusual, fortunately, but the fact that they were visible was not. People blurt things out online. Social media make for a vast vault of human communication that tech companies could study for toxic effects, like billions of poisoned lungs.

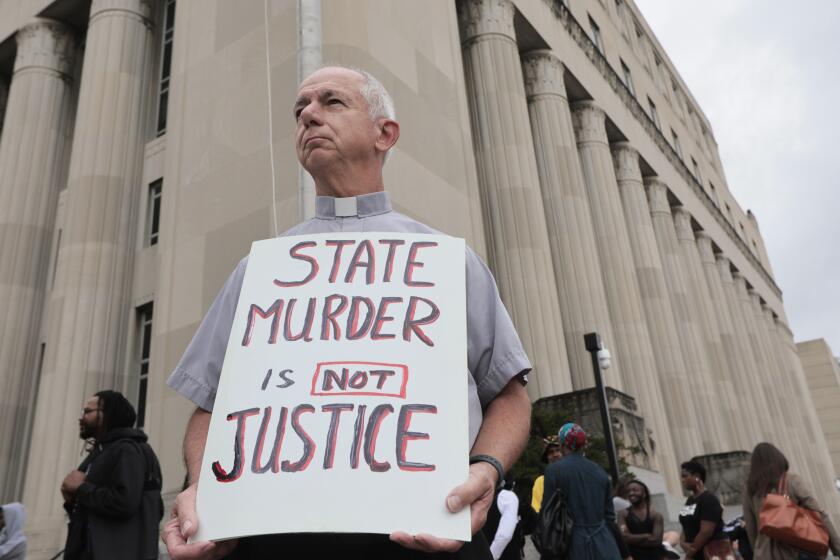

To better prevent extremist violence, the companies should start by building software to look for certain kinds of shifts in responses to the posts of powerful figures around the world. They should concentrate on account holders who purvey and attract vitriol in equal measure — politicians, clerics, media celebs, sidekicks who post snark and slander on behalf of their bosses, and QAnon types.

The software can search for signs that these influencers are being interpreted as endorsing or calling for violence. Algorithm builders are adept at finding tags that indicate all kinds of human behavior; they do it for purposes such as making ads more effective. They can find signals that flag violence in the making as well.

Nonetheless, no intervention should be made on the basis of software alone. Humans must review what gets flagged and make the call as to whether a critical mass of followers is being dangerously incited.

What matters most in each decision depends on context and circumstances. Moderators would have to filter out people who post fake plans for violence. They have practice at this; attempts to game content moderation systems are common.

And they would work through a checklist of factors. How widespread is the effect? Are those responding known threats? Do they have access to weapons and to their intended victims? How specific are their schemes?

When moderators believe a post has incited violence, they would put the account holder on notice with a message along these lines: “Many of your followers understand you to be endorsing or calling for violence. If that’s not your intention, please say so clearly and publicly.”

If the account holder refuses, or halfheartedly calls on their followers to stand down (as Trump did), the burden would shift back to the tech company to intervene. It might begin by publicly announcing its findings and its attempt to get the account holder to repudiate violence. Or it might shut down the account.

This “study the lungs” method won’t always work; in some cases it may generate data too late to prevent violence, or the content will circulate so widely that social media companies’ intervention won’t make enough of a difference. But focusing on responses and not just initial posts has many advantages.

First, when companies did take down content, they would do so based on demonstrated effects, not on contested interpretations of a post's meaning. This would increase the chances of curbing incitement while also protecting freedom of expression.

This approach would also give account holders notice and an opportunity to respond. Currently, posts or accounts are often taken down summarily and with minimal explanation.

Finally, it would establish a process that treats all posters equitably, unlike the current practice of giving politicians and public figures the benefit of the doubt, which too often lets them flout community standards and incite violence.

In September 2019, for example, Facebook said it would no longer enforce its own rules against hate speech on posts from politicians and political candidates. "It is not our role to intervene when politicians speak," said Nick Clegg, a Facebook executive and former British deputy prime minister. Facebook would make exceptions "where speech endangers people," but Clegg didn't explain how the company would identify such speech.

Major platforms such as Twitter and Facebook have also given politicians a broader free pass with a “newsworthiness” or “public interest” exemption: Almost anything said or written by public figures must be left online. The public needs to know what such people are saying, the rationale goes.

Certainly, private companies should not stifle public discourse. But people in prominent positions can always reach the public without apps or platforms. Also, on social media the “public’s right to know” rule has a circular effect, since newsworthiness goes up and down with access. Giving politicians an unfiltered connection grants them influence and makes their speech newsworthy.

In Trump's case, social media companies allowed him to threaten and incite violence for years, often with lies. Facebook, for example, intervened only when he blatantly violated its policy against COVID-19 misinformation, and did not suspend his account until Jan. 7, the day after his followers breached the Capitol. By waiting for violence to occur, companies made their rules against incitement to violence toothless.

Whatever Facebook’s final decision about banning the former president, it will come too late to prevent the damage he did to our democracy with online incitement and disinformation. Tech companies have powerful tools for enforcing their own rules against such content. They just need to use them effectively.

Susan Benesch is the founder and director of the Dangerous Speech Project, which studies speech that inspires intergroup violence and ways to prevent it while protecting freedom of expression. She is also a faculty associate at the Berkman Klein Center for Internet & Society at Harvard University.
