Edison, Morse ... Watson? Artificial intelligence poses test of who’s an inventor

Computers using artificial intelligence are discovering medicines, designing better golf clubs and creating video games.

But are they inventors?

Patent offices around the world are grappling with the question of who — if anyone — owns innovations developed using AI. The answer may upend what’s eligible for protection and who profits as AI transforms entire industries.

“There are machines right now that are doing far more on their own than to help an engineer or a scientist or an inventor do their jobs,” said Andrei Iancu, director of the U.S. Patent and Trademark Office. “We will get to a point where a court or legislature will say the human being is so disengaged, so many levels removed, that the actual human did not contribute to the inventive concept.”

U.S. law says only humans can obtain patents, Iancu said. That’s why the patent office has been collecting comments on how to deal with inventions created through artificial intelligence and is expected to release a policy paper this year. Likewise, the World Intellectual Property Organization, an agency within the United Nations, and patent and copyright agencies around the world are trying to figure out whether existing laws or practices need to be revised for AI inventions.

The debate comes as some of the largest global technology companies look to monetize massive investments in AI. Google Chief Executive Sundar Pichai has described AI as “more profound than fire or electricity.” Microsoft Corp. has invested $1 billion in the research company OpenAI. Both companies have thousands of employees and researchers pushing to advance the state of the art and move AI innovations into products.

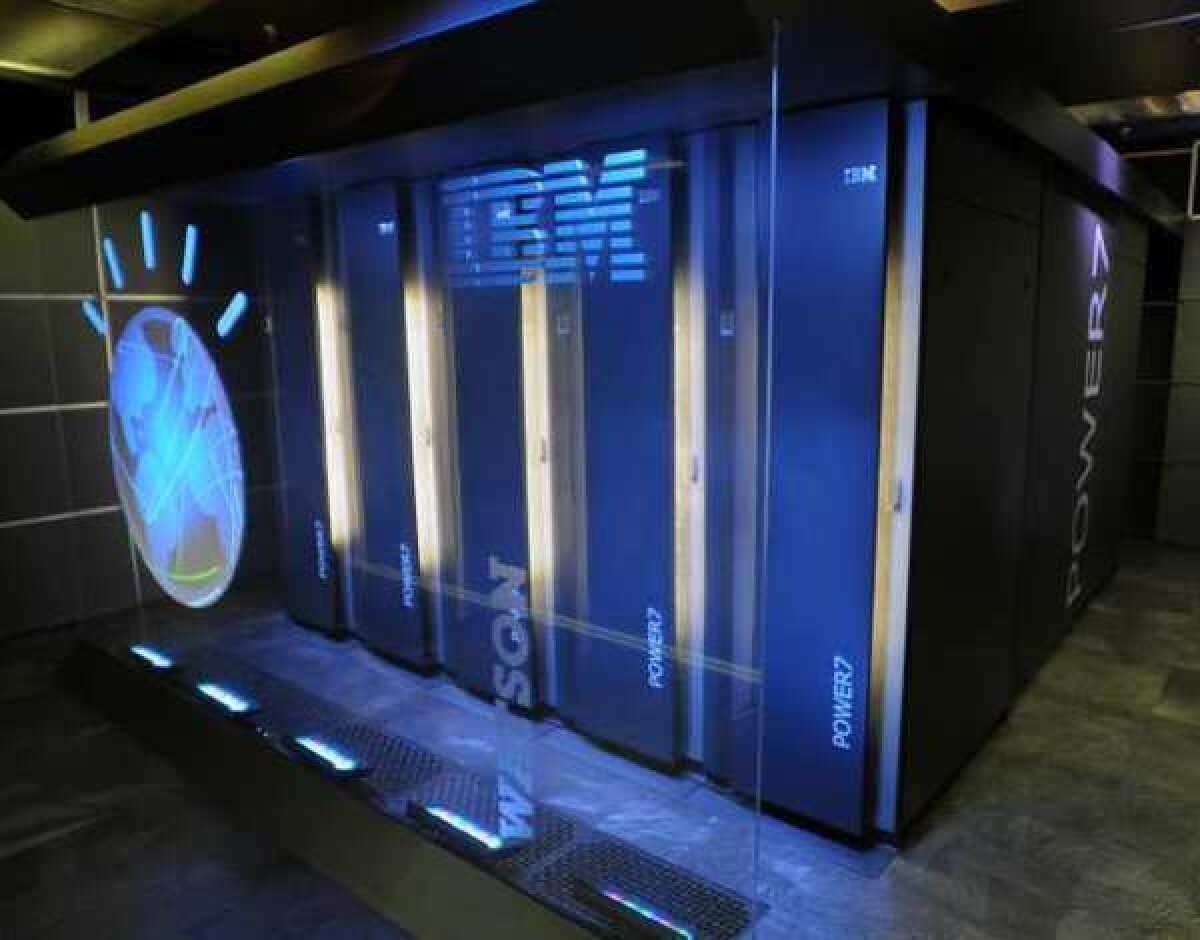

IBM Corp.’s supercomputer Watson is working with MIT on a research lab to develop new applications of AI in different industries, and some of China’s biggest companies are giving U.S. companies a run for their money in the field.

The European Patent Office last month rejected applications by the owner of an AI “creativity machine” named Dabus, saying that there is a “clear legislative understanding that the inventor is a natural person.” In December, the United Kingdom’s Intellectual Property Office turned down similar petitions, noting AI was never contemplated when the law was written.

“Increasingly, Fortune 100 companies have AI doing more and more autonomously, and they’re not sure if they can find someone who would qualify as an inventor,” said Ryan Abbott, a law professor at the University of Surrey in England. “If you can’t get protection, people may not want to use AI to do these things.”

Abbott and Stephen Thaler, founder of Imagination Engines Inc. in St. Charles, Mo., filed patent applications in numerous countries for a food container and a “device for attracting enhanced attention,” listing Thaler’s machine Dabus as the inventor.

The goal, Abbott said, was to force patent offices to confront the issue. He advocates listing the computer that did the work as the inventor, with the business that owns the machine also owning any patent. It would ensure that companies can get a return on their investment and maintain a level of honesty about whether it’s a machine or a human that’s doing the work, he said.

Businesses “don’t really care who’s listed as an inventor, but they do care if they can get a patent,” Abbott said. “We really didn’t design the law with this in mind, so what do we want to do about it?”

Still, to many AI experts and researchers, the field is nowhere near advanced enough to consider the idea of an algorithm as an inventor.

“Listing an AI system as a co-inventor seems like a gimmick rather than a requirement,” said Oren Etzioni, head of the Allen Institute for Artificial Intelligence in Seattle. “We often use computers as critical tools in generating patentable technology, but we don’t list our tools as co-inventors. AI systems don’t have intellectual property rights — they are just computer tools.”

The current state of the art in AI should put this question off for a long time, said Erik Brynjolfsson, director of the MIT Initiative on the Digital Economy, who suggested the debate might be more appropriate in a “century or two.” Researchers are “very far from artificial general intelligence like ‘The Terminator.’”

It’s not just who’s listed as the inventor that is flummoxing patent agencies.

Software thus far can’t follow the scientific method of independently developing a hypothesis and then conducting tests to prove or disprove it. Instead, AI is more often used in “brute force” situations, in which it would simply “churn through a bunch of possibilities and see what works,” said Dana Rao, general counsel for Adobe Inc.

“The question is not ‘Can a machine be an inventor?’ It’s ‘Can a machine invent?’” Rao said. “It can’t in the traditional way we view invention.”

A patent is awarded to something that is “new, useful and non-obvious.” Often, that means figuring out what a person with “ordinary skill” in the field would understand to be new — for instance, a knowledgeable laboratory researcher. That analysis gets skewed when courts and patent offices have to compare the work of a software program that can analyze an exponentially greater number of options than even a large team of human researchers.

“The bar is changing when you use AI,” said Kate Gaudry, a patent lawyer with Kilpatrick Townsend & Stockton in Washington. “However this is decided, we have to be consistent.”

Iancu likened it to debates a century ago over awarding copyrights to photographs taken with a camera.

“Somebody must have created the machine, somebody must have trained the machine and somebody must have pushed the ‘on’ button,” he said. “Do we think those activities are enough to count as human contributions to the invention process? If yes, the current law is enough.”

Still, Rao said, there needs to be some way to help companies using AI to protect their ideas. That’s particularly true for copyrights on photographs created through a type of machine learning system known as generative adversarial networks.

“If I want to create images to sell them, there needs to be ways of determining ownership,” Rao said.

Abbott said one option would be similar to a law in Britain, where computer-generated artistic works are given a shorter copyright term than those created by a human. The U.S. requires copyright owners to be human, and famously denied a registration request on behalf of a macaque monkey that had taken a “selfie” with a British nature photographer’s camera.

The evolution of machine learning and neural networks means that, at some point, the role of humans in certain types of innovation will decrease. In those cases, who will own the inventions is a question that’s crucial to companies using AI to develop new products.

Iancu said he sees AI as full of promise and notes that agencies have had to address such weighty questions before, including genetically modified animals created in a lab, complex mathematics used for cryptography and synthetic DNA.

“It’s one of these things where hopefully, the various jurisdictions around the world can discuss these issues before it’s too late, before we have to play catch-up,” Iancu said.
